Ohio State University Researchers Examine the Capabilities of AI Models

Imagine learning the concept of a flower without ever smelling a rose or brushing your fingers over its petals. You might be able to form a mental image or describe its characteristics, but would you truly understand the concept? That is the central question addressed in a recent study by Ohio State University, which investigated whether large language models such as ChatGPT and Gemini can represent human concepts without experiencing the world through a body. The answer, according to the Ohio State researchers and their collaborating institutions, is: not entirely.

The results suggest that even the most advanced AI tools still lack the sensorimotor grounding that gives human concepts their richness. Large language models are remarkably good at identifying patterns, categories and relationships in language, often outperforming people on strictly verbal or statistical tasks, yet the study reveals a consistent deficit for concepts rooted in sensorimotor experience. When a concept involves senses such as smell or touch, or bodily actions such as holding, moving or interacting, language alone does not appear to be enough.

All images courtesy of Pavel Danilyuk

ChatGPT and Gemini could not fully grasp the concept of a flower

Researchers at Ohio State University tested four leading AI models (GPT-3.5, GPT-4, PaLM and Gemini) on a dataset of more than 4,400 words that people had previously rated on different conceptual dimensions. These dimensions range from abstract qualities, such as "imageability" and "emotional arousal", to more grounded ones, such as how strongly a concept is experienced through the senses or through movement.

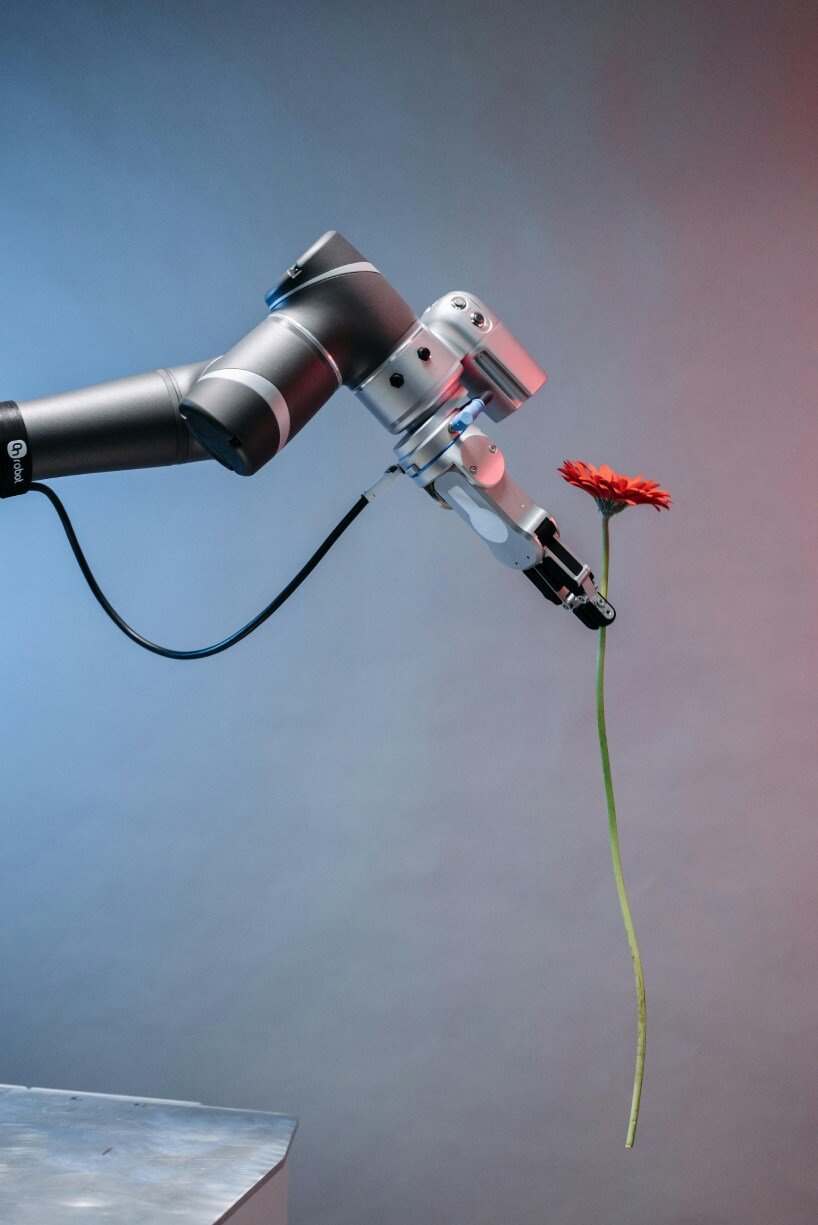

Words such as "flower", "hoof", "swing" and "humor" were then rated by both people and the models for how strongly they related to each dimension. The models' ratings aligned closely with human ratings in non-sensory categories such as imageability and valence, but their performance dropped considerably when sensory or motor qualities were involved. A flower might be recognized as something visual, for example, yet the models struggled to represent the integrated physical experiences that most people naturally associate with it. "A large language model can't smell a rose, touch the petals of a daisy or walk through a field of wildflowers," says Qihui Xu, the study's first author. "They obtain what they know by consuming vast amounts of text, orders of magnitude more than a human is exposed to in an entire lifetime, and still cannot quite capture some concepts the way humans do."

Investigating whether large language models like ChatGPT and Gemini can accurately represent human concepts

The role of the senses and bodily experience in thought

The study, recently published in Nature Human Behaviour, weighs in on a long-standing debate in cognitive science: can we form concepts without grounding them in bodily experience? Some theories suggest that people, particularly those with sensory impairments, can build rich conceptual frameworks from language alone. Others argue that physical interaction with the world is inseparable from how we understand it. From this perspective, a flower is more than an object with a particular shape. It is a bundle of sensory triggers and embodied memories, such as the feeling of sunlight on your skin or the moment you stop to smell a bloom, along with emotional associations with gardens, gifts, grief or holidays. These are multimodal, multisensory experiences, and current language models such as GPT and Gemini, trained mainly on internet text, can only approximate them.

The models are not without ability, however. In one part of the study, they correctly linked roses and pasta as both being "high in smell." Yet people are unlikely to think of the two as conceptually related, because we do not compare objects by isolated attributes alone; we draw on a multidimensional network of experiences that includes how things feel, what we do with them and what they mean to us.

The Ohio State University study suggests that these models cannot fully capture human sensory experience

The future of large language models and embodied AI

Interestingly, the study also found that models trained on both text and images performed better in certain sensory categories, particularly those related to vision. This points to a future in which multimodal training (combining text, images and, eventually, sensor data) could help models move closer to human-like understanding. The researchers remain cautious, however. As Qihui Xu notes, even with image data, AI still lacks a key part of the picture: how concepts are shaped by action and interaction.

Integrating robotics, sensor technology and embodied interaction could eventually move AI toward this kind of understanding. For now, though, human experience remains far richer than anything language models, however large or advanced, can reproduce.
